Introducing Pandas

Pandas is a widely used Python library for data analysis and manipulation. However, as datasets grow, its default operations may not always be the most efficient. To maximize its utility, Pandas requires certain optimizations to enhance performance for larger datasets.

This guide focuses on four key optimization techniques to improve speed and memory efficiency:

- Index Optimization

- Vectorization of Operations

- Memory Optimization

- Filter Optimization

Let’s explore these techniques with practical examples to demonstrate their impact.

1. Index Optimization

Indexes play a vital role in improving the performance of lookups, merges, and data retrievals. Using indexed operations avoids full DataFrame scans and significantly speeds up these tasks, particularly with large datasets.

Key Advantages

- Faster merges and lookups.

- Reduced computational overhead compared to raw column-based operations.

Examples

i. Merging on Index

import pandas as pd

#Sample data for listings

listings_data = {

'listing id': [29844866, 12345678, 87654321, 11223344],

'name': ['Luxury Villa', 'Cozy Apartment', 'Modern Condo', 'Beach House'],

'price': [300, 120, 250, 500],

'location': ['Los Angeles', 'New York', 'San Francisco', 'Miami']

}

Listings = pd.DataFrame(listings_data)

#Sample data for reviews

reviews_data = {

'listing id': [29844866, 12345678, 87654321, 29844866],

'review id': [1, 2, 3, 4],

'rating': [5, 4, 3, 4],

'comments': ['Great place!', 'Nice location', 'Average experience', 'Loved it!']

}

reviews = pd. DataFrame(reviews_data)

Index-based merges are faster than column-based ones, as the index allows Pandas to directly align rows.

#Merging based on column

%timeit listings.merge(reviews, on='listing_id')

#Output: 439 ms ± 24.5 ms per loop

#Merging on index

reviews_ = reviews.set_index('listing_id')

listings_ = listings.set_index('listing_id')

%timeit listings_.merge(reviews_, left_index=True, right_index=True)

#Output: 393 ms ± 17.4 ms per loop

ii. Fast Lookups with Indexes

# Indexed lookup using .at[ ] %timeit listings.at[29844866, 'name'] # Output: 5.34 μs ± 474 ns # Non-indexed lookup %timeit listings.loc[listings['listing_id'] ==29844866, 'name'] # Output: 593 μs ± 30 μs

Using .at[] with indexed data is significantly quicker, avoiding the overhead of filtering and positional indexing.

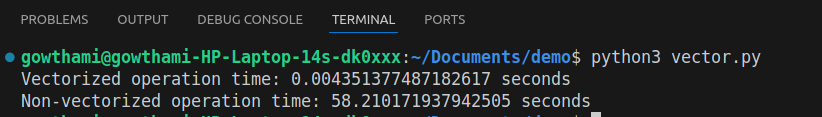

2. Vectorization of Operations

Vectorization is a programming technique where computations are applied to entire arrays or collections of data in a single step, rather than processing individual elements iteratively. This eliminates the need for explicit loops, making the code faster, cleaner, and more efficient. It is especially useful for operations on large datasets, as the underlying libraries like Pandas optimize these operations at the low-level using highly efficient code.

Example: Normalizing a Column

import time

import pandas as pd

# Create a sample dataframe using only pandas

listings = pd.DataFrame({

'price': pd.Series([i for i in range(100000)]) # Creating a sample dataframe with 100,000 rows

})

# Vectorized normalization

start_time_vec = time.time()

listings['normalized_price_vec'] = (listings['price'] - listings['price'].min()) / (listings['price'].max() - listings['price'].min())

end_time_vec = time.time()

# Non-vectorized normalization

start_time_non_vec = time.time()

for i in range(len(listings)):

listings.loc[i, 'normalized_price_non_vec'] = (listings.loc[i, 'price'] - listings['price'].min()) / (listings['price'].max() - listings['price'].min())

end_time_non_vec = time.time()

# Print times

print(f"Vectorized operation time: {end_time_vec - start_time_vec} seconds")

print(f"Non-vectorized operation time: {end_time_non_vec - start_time_non_vec} seconds")

The vectorized approach is not only faster but also more readable.

For complex operations that resist vectorization, consider using NumPy functions or broadcasting, which can still outperform explicit loops.

3. Memory Optimization

Pandas often defaults to large memory footprints when loading data. Columns are usually inferred as int64 or float64, consuming unnecessary memory. Optimizing data types can drastically reduce the memory usage of your DataFrame.

Best Practices for Memory Optimization

i. Downcast Numeric Columns

- Convert integers and floats to their smallest possible data types.

df['int_column'] = pd.to_numeric(df['int_column'], downcast='integer') df['float_column'] = pd.to_numeric(df['float_column'], downcast='float')

ii. Categorical Data Conversion

- Convert string columns with repetitive values into category data types.

df['category_column'] = df['category_column'].astype('category')

Example: Reducing DataFrame Memory Usage

# Initial memory usage

listings.info(memory_usage='deep')

# Function to downcast columns

def optimize_df(df):

for col in df.select_dtypes (include=['int']).columns: df[col] = pd.to_numeric(df[col], downcast='unsigned')

for col in df.select_dtypes (include=['float']).columns: df[col] = pd.to_numeric(df[col], downcast='float')

for col in df.select_dtypes (include=['object']).columns: num_unique = len(df[col].unique())

num_total = len(df[col])

if num_unique / num_total < 0.5:

df[col] = df [col].astype('category')

optimize_df(listings)

# Optimized memory usage

listings.info(memory_usage='deep')

By reducing the size of numeric types and converting categorical data, we significantly reduce the DataFrame's memory usage, making it easier to work with larger datasets without running into memory constraints.

4. Filter Optimization

Efficient filtering minimizes the size of the data being processed in subsequent operations, saving time and memory. Filters should be applied as early as possible in the data pipeline.

Best Practices

i. Apply Filters Before Joins

- Filtering smaller datasets reduces the size of the data involved in joins.

filtered_small_df = small_df[small_df['value'] > 100] merged = large_df.merge(filtered_small_df, on="key_column")

ii. Avoid Iterative Filtering

- Combine conditions in a single filtering operation.

# Inefficient df = df[df['A'] > 10] df = df[df['B'] < 50] # Efficient df = df[(df['A'] > 10) & (df['B'] < 50)]

Example: Efficient Filtering

# Inefficient: Merging first, filtering later merged_df = listings.merge(reviews, on='listing_id') filtered_df = merged_df[merged_df['price'] > 100] # Efficient: Filtering first, then merging filtered_reviews = reviews[reviews['ratings']>4] merged_df = listings.merge(filtered_reviews, on='listing_id')

By filtering the smaller reviews DataFrame before merging, we reduce the size of the data that needs to be joined, improving overall performance.

Conclusion

Pandas is a powerful and versatile library for data analysis, but optimizing its operations is crucial when working with larger datasets. The four techniques discussed—index optimization, vectorization, memory optimization, and filter optimization—can significantly enhance the efficiency of your Pandas workflows.

By incorporating these best practices, you can reduce processing time, lower memory consumption, and build scalable data pipelines. Whether working with small datasets or preparing for large-scale production tasks, these optimizations will ensure your Pandas operations are as effective as possible.